Terraform automation: Virtual/physical network (Cumulus Network)

To provide North/South connectivity and to test routing, I deploy predefined virtual Top of Rack switches with Layer 3 routing capabilities and a single “Core” router.

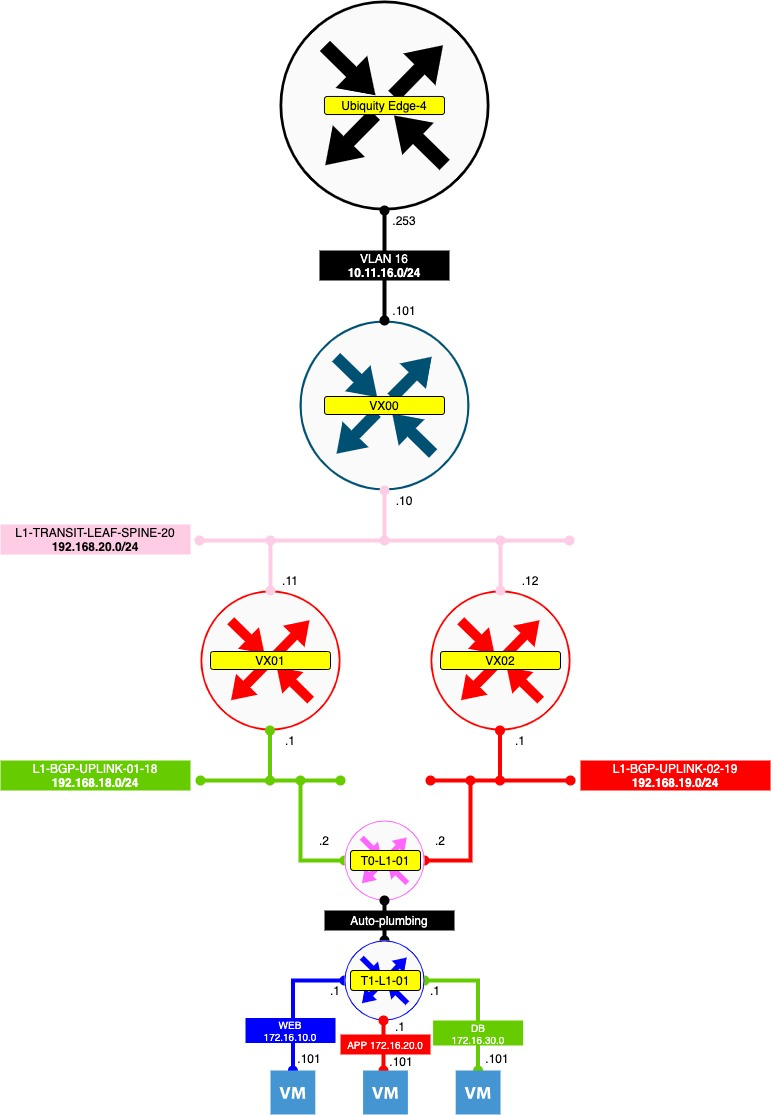

The best way to think of this is in levels:

- LEVEL 1 (INFRA)
  - My physical network infrastructure, consisting of my infra-network and my infra-NSXT-network.
- LEVEL 2 (INFRA)
  - The “virtual” network infrastructure of the Lab Tenant (in this case Lab 1) that simulates a physical network with a Core Router and two Top of Rack switches.
  - The underlay network I use here is also NSX-T, which is part of my infra-NSXT-network.
- LEVEL 3 (VIRTUAL)
  - The “virtual” NSX-T network infrastructure living inside the Lab Tenant (in this case Lab 1).
  - These components and NSX-T network segments are part of the virtual-NSXT-network dedicated to the Lab Tenant (in this case Lab 1).

The drawing below will give you an overview of how the networking is done specifically for Lab 1.

The list below describes what each device does:

- Ubiquiti Edge 4
  - This is my physical internal home router that handles all routing and connectivity between my home networks and the internet.
- VX00
  - This is a virtual core router that is part of a specific lab (in this case Lab 1) and simulates the core network of the Lab Tenant.
  - This virtual machine is a Cumulus VX router that is preconfigured with the correct IP addresses and BGP peers.
  - This virtual machine is cloned from a template using Terraform.
- VX01
  - This is a virtual router that is part of a specific lab (in this case Lab 1) and simulates the first Top of Rack switch of the Lab Tenant.
  - This virtual machine is a Cumulus VX router that is preconfigured with the correct IP addresses and BGP peers.
  - This virtual machine is cloned from a template using Terraform.
- VX02
  - This is a virtual router that is part of a specific lab (in this case Lab 1) and simulates the second Top of Rack switch of the Lab Tenant.
  - This virtual machine is a Cumulus VX router that is preconfigured with the correct IP addresses and BGP peers.
  - This virtual machine is cloned from a template using Terraform.
- T0-L1-01
  - This is the T0 Gateway, managed by the nested NSX-T Manager within Lab 1.
- T1-L1-01
  - This is the T1 Gateway, managed by the nested NSX-T Manager within Lab 1.
  - This T1 Gateway hosts the nested networks that live inside the Lab Tenant (in this case Lab 1).

The point of this design is that I can create multiple lab tenants, each running a different NSX-T version, or test a specific NSX-T version against a different vSphere version.

Using Terraform and predefined template virtual machines (VX00, VX01 and VX02) allows me to quickly set up complete SDDC environments and to tear them down again when I no longer need them.

The latest version of the Cumulus VX deployment image can be downloaded from the Cumulus Networks website.

Make sure you download the VMware OVA image, then deploy it. For the first image I used the name “VX00-template”. The deployment wizard only lets you select the network for a single network interface, but after the deployment you will see that the Cumulus VX virtual machine has four vNICs.
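If you prefer the command line over the deployment wizard, the OVA import can also be scripted. The sketch below assumes the `govc` CLI is installed and configured through `GOVC_URL`, `GOVC_USERNAME` and `GOVC_PASSWORD`, and that one import per template (VX00-template, VX01-template, VX02-template) is what you want; the OVA file name is a placeholder. It only prints the commands unless `DRY_RUN=0` is set, and `govc import.ova -h` is the place to confirm the options for your govc version.

```shell
#!/usr/bin/env bash
# Sketch: import the Cumulus VX OVA once per router template (dry run by default).
# Assumptions: govc is installed and configured through GOVC_URL, GOVC_USERNAME
# and GOVC_PASSWORD; the OVA file name below is a placeholder.
set -euo pipefail

OVA_FILE="${OVA_FILE:-cumulus-linux-vx.ova}"  # placeholder, use your downloaded file
DATASTORE="${DATASTORE:-vsanDatastore}"
POOL="${POOL:-Lab1}"                          # may need a full inventory path
DRY_RUN="${DRY_RUN:-1}"                       # 1 = only print the commands

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [[ "$DRY_RUN" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

import_templates() {
  local name
  for name in VX00-template VX01-template VX02-template; do
    run govc import.ova -name="$name" -ds="$DATASTORE" -pool="$POOL" "$OVA_FILE"
  done
}

import_templates
```

Keeping the dry run as the default means you can review the three commands before anything is created in vCenter.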

I use the following vNIC-to-port-group mapping to build the topology shown in the picture above:

| vNIC | VX00 | VX01 | VX02 |
|---|---|---|---|
| Network adapter 1 | L1-APP-MGMT-11 | L1-APP-MGMT-11 | L1-APP-MGMT-11 |
| Network adapter 2 | SEG-VLAN16 | L1-TRANSIT-LEAF-SPINE-20 | L1-TRANSIT-LEAF-SPINE-20 |
| Network adapter 3 | L1-TRANSIT-LEAF-SPINE-20 | L1-BGP-UPLINK-01-18 | L1-BGP-UPLINK-02-19 |
| Network adapter 4 | VM Network (not used) | VM Network (not used) | VM Network (not used) |
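The same vNIC-to-port-group mapping can be applied from the command line instead of editing each virtual machine in the vSphere Client. A minimal sketch, again assuming a configured `govc`; it relies on govc naming Network adapter 1 to 4 `ethernet-0` to `ethernet-3`, needs bash 4 or later for the associative array (the macOS default bash is older), and only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Sketch: apply the vNIC-to-port-group mapping with govc (dry run by default).
# Assumption: govc names Network adapter 1..4 "ethernet-0".."ethernet-3".
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands

# Port groups per VM in adapter order (Network adapter 1..4), from the mapping above.
declare -A NIC_MAP=(
  [VX00-template]="L1-APP-MGMT-11|SEG-VLAN16|L1-TRANSIT-LEAF-SPINE-20|VM Network"
  [VX01-template]="L1-APP-MGMT-11|L1-TRANSIT-LEAF-SPINE-20|L1-BGP-UPLINK-01-18|VM Network"
  [VX02-template]="L1-APP-MGMT-11|L1-TRANSIT-LEAF-SPINE-20|L1-BGP-UPLINK-02-19|VM Network"
)

map_nics() {
  local vm="$1" i=0 pg
  local mapping="${NIC_MAP[$vm]:-}"
  local -a groups
  if [[ -z "$mapping" ]]; then
    echo "unknown VM: $vm" >&2
    return 1
  fi
  IFS='|' read -r -a groups <<< "$mapping"
  for pg in "${groups[@]}"; do
    if [[ "$DRY_RUN" == "1" ]]; then
      printf 'govc vm.network.change -vm %s -net "%s" ethernet-%d\n' "$vm" "$pg" "$i"
    else
      govc vm.network.change -vm "$vm" -net "$pg" "ethernet-$i"
    fi
    i=$((i + 1))
  done
}

for vm in VX00-template VX01-template VX02-template; do
  map_nics "$vm"
done
```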

To provide the uplink towards my physical network over VLAN 16, I first need to configure VLAN 16 on my physical network devices:

EDGE4:

    configure
    set interfaces ethernet eth1 vif 16 address 10.11.16.253/24
    set service dns forwarding listen-on eth1.16
    set service nat rule 5016 description "VLAN16 - 82.94.132.155"
    set service nat rule 5016 log disable
    set service nat rule 5016 outbound-interface eth0
    set service nat rule 5016 source address 10.11.16.0/24
    set service nat rule 5016 type masquerade
    set firewall group network-group LAN_NETWORKS network 10.11.16.0/24
    commit
    save

EDGE-16XG:

    configure
    vlan database
    vlan 16
    vlan name 16 NESTED-UPLINK
    exit
    do copy system:running-config nvram:startup-config

Cisco 4948:

    conf t
    vlan 16
     name NESTED-UPLINK
    exit
    end
    copy run start

UniFi Network: see the screenshot below.

NSX-T: see the screenshot below.
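Before configuring BGP, it is worth checking that both ends of each peering really share a subnet, for example the EDGE4 VLAN 16 interface (10.11.16.253/24) and VX00 swp1 (10.11.16.10/24). A small sketch in plain bash arithmetic; `ip_to_int` and `same_subnet` are hypothetical helpers, not part of any tool used in this post.

```shell
#!/usr/bin/env bash
# Sketch: check whether two IPv4 addresses fall in the same subnet.
# ip_to_int and same_subnet are hypothetical helpers for illustration.
set -euo pipefail

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# same_subnet IP1 IP2 PREFIXLEN: exit status 0 if both share the network part.
same_subnet() {
  local ip1 ip2 mask
  ip1=$(ip_to_int "$1")
  ip2=$(ip_to_int "$2")
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  (( (ip1 & mask) == (ip2 & mask) ))
}

if same_subnet 10.11.16.253 10.11.16.10 24; then
  echo "EDGE4 <-> VX00 uplink: same /24"
fi
```

The same check applies to the transit peerings, for example `same_subnet 192.168.20.10 192.168.20.11 24` for VX00 and VX01.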

The initial configuration of the Cumulus routers is done through the vSphere (web) console. Once the eth0 interface is configured, you can reach the router over SSH and do the rest of the configuration there. The configuration below uses NCLU commands; for the interfaces, the equivalent direct edits to /etc/network/interfaces are shown as well.

The default credentials are:

- Username: cumulus
- Password: CumulusLinux!

The configuration for the Cumulus Routers can be found below:


VX00:

    cumulus@cumulus:~$ sudo vi /etc/network/interfaces
    !
    auto eth0
    iface eth0
        address 192.168.12.21/24
        gateway 192.168.12.1
    cumulus@cumulus:~$ sudo ifreload -a
    ===========================================================
    net add interface eth0 ip address 192.168.12.21/24
    net add interface eth0 ip gateway 192.168.12.1
    !
    net add hostname VX00
    !
    net pending
    net commit
    ===========================================================
    cumulus@cumulus:~$ sudo vi /etc/frr/daemons
    !
    zebra=yes
    bgpd=yes
    !
    cumulus@cumulus:~$ sudo systemctl enable frr.service
    cumulus@cumulus:~$ sudo systemctl start frr.service
    !
    ===========================================================
    cumulus@cumulus:~$ sudo vi /etc/network/interfaces
    !
    # The loopback network interface
    auto lo
    iface lo inet loopback
        address 1.1.1.1/32
    #
    auto swp1
    iface swp1
        address 10.11.16.10/24
    #
    auto swp2
    iface swp2
        address 192.168.20.10/24
    #
    cumulus@cumulus:~$ sudo ifreload -a
    !
    ===========================================================
    net add loopback lo ip address 1.1.1.1/32
    net add interface swp1 ip address 10.11.16.10/24
    net add interface swp2 ip address 192.168.20.10/24
    !
    net add bgp autonomous-system 65030
    net add bgp router-id 1.1.1.1
    !
    #net add bgp neighbor 10.11.16.253 remote-as 64512
    net add bgp neighbor 192.168.20.11 remote-as 65031
    net add bgp neighbor 192.168.20.12 remote-as 65032
    !
    net add bgp ipv4 unicast network 1.1.1.1/32
    net add bgp ipv4 unicast redistribute connected
    !
    net commit
    ===========================================================
    net show bgp summary
    net show bgp neighbors
    net show route bgp
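The interactive `vi` edit of /etc/frr/daemons in the VX00 configuration above (repeated for VX01 and VX02) can also be done non-interactively, which helps when you build the three templates one after the other. A sketch, assuming the file contains lines of the form `bgpd=no` and GNU sed as shipped with Cumulus Linux; `enable_frr_daemons` is a hypothetical helper whose file path is an optional argument so it can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Sketch: enable zebra and bgpd in an FRR daemons file without opening vi.
# enable_frr_daemons is hypothetical; GNU sed syntax (sed -i without a suffix).
set -euo pipefail

enable_frr_daemons() {
  local file="${1:-/etc/frr/daemons}"
  # Flip "zebra=no" and "bgpd=no" to "=yes"; other daemons stay untouched,
  # and lines that already say "yes" (or are absent) are left as they are.
  sed -i -e 's/^zebra=no$/zebra=yes/' -e 's/^bgpd=no$/bgpd=yes/' "$file"
}
```

On the router itself, the same `sed` command needs `sudo`, followed by enabling and starting `frr.service` as shown above.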


VX01:

    cumulus@cumulus:~$ sudo vi /etc/network/interfaces
    !
    auto eth0
    iface eth0
        address 192.168.12.22/24
        gateway 192.168.12.1
    cumulus@cumulus:~$ sudo ifreload -a
    ===========================================================
    net add interface eth0 ip address 192.168.12.22/24
    net add interface eth0 ip gateway 192.168.12.1
    !
    net add hostname VX01
    !
    net pending
    net commit
    ===========================================================
    cumulus@cumulus:~$ sudo vi /etc/frr/daemons
    !
    zebra=yes
    bgpd=yes
    !
    cumulus@cumulus:~$ sudo systemctl enable frr.service
    cumulus@cumulus:~$ sudo systemctl start frr.service
    !
    ===========================================================
    cumulus@cumulus:~$ sudo vi /etc/network/interfaces
    !
    # The loopback network interface
    auto lo
    iface lo inet loopback
        address 2.2.2.2/32
    #
    auto swp1
    iface swp1
        address 192.168.20.11/24
    #
    auto swp2
    iface swp2
        address 192.168.18.1/24
    #
    cumulus@cumulus:~$ sudo ifreload -a
    !
    ===========================================================
    net add loopback lo ip address 2.2.2.2/32
    net add interface swp1 ip address 192.168.20.11/24
    net add interface swp2 ip address 192.168.18.1/24
    !
    net add bgp autonomous-system 65031
    net add bgp router-id 2.2.2.2
    !
    net add bgp neighbor 192.168.20.10 remote-as 65030
    !
    net add bgp ipv4 unicast network 2.2.2.2/32
    net add bgp ipv4 unicast redistribute connected
    !
    net commit
    ===========================================================
    net show bgp summary
    net show bgp neighbors
    net show route bgp


VX02:

    cumulus@cumulus:~$ sudo vi /etc/network/interfaces
    !
    auto eth0
    iface eth0
        address 192.168.12.23/24
        gateway 192.168.12.1
    cumulus@cumulus:~$ sudo ifreload -a
    ===========================================================
    net add interface eth0 ip address 192.168.12.23/24
    net add interface eth0 ip gateway 192.168.12.1
    !
    net add hostname VX02
    !
    net pending
    net commit
    ===========================================================
    cumulus@cumulus:~$ sudo vi /etc/frr/daemons
    !
    zebra=yes
    bgpd=yes
    !
    cumulus@cumulus:~$ sudo systemctl enable frr.service
    cumulus@cumulus:~$ sudo systemctl start frr.service
    !
    ===========================================================
    cumulus@cumulus:~$ sudo vi /etc/network/interfaces
    !
    # The loopback network interface
    auto lo
    iface lo inet loopback
        address 3.3.3.3/32
    #
    auto swp1
    iface swp1
        address 192.168.20.12/24
    #
    auto swp2
    iface swp2
        address 192.168.19.1/24
    #
    cumulus@cumulus:~$ sudo ifreload -a
    !
    ===========================================================
    net add loopback lo ip address 3.3.3.3/32
    net add interface swp1 ip address 192.168.20.12/24
    net add interface swp2 ip address 192.168.19.1/24
    !
    net add bgp autonomous-system 65032
    net add bgp router-id 3.3.3.3
    !
    net add bgp neighbor 192.168.20.10 remote-as 65030
    !
    net add bgp ipv4 unicast network 3.3.3.3/32
    net add bgp ipv4 unicast redistribute connected
    !
    net commit
    ===========================================================
    net show bgp summary
    net show bgp neighbors
    net show route bgp
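VX01 and VX02 differ only in their addresses and AS number, so their loopback, swp and BGP commands can be generated from a few parameters. A minimal sketch; `leaf_nclu` is a hypothetical helper that only prints the NCLU commands (review them, then paste them into the router's SSH session) and does not cover the eth0 and daemons steps.

```shell
#!/usr/bin/env bash
# Sketch: print the NCLU commands for one Top of Rack router (VX01/VX02 style).
# leaf_nclu is hypothetical; it only prints commands and changes nothing.
set -euo pipefail

# Usage: leaf_nclu LOOPBACK_IP LOCAL_AS SWP1_CIDR SWP2_CIDR CORE_IP CORE_AS
leaf_nclu() {
  local lo="$1" asn="$2" swp1="$3" swp2="$4" core_ip="$5" core_as="$6"
  cat <<EOF
net add loopback lo ip address ${lo}/32
net add interface swp1 ip address ${swp1}
net add interface swp2 ip address ${swp2}
net add bgp autonomous-system ${asn}
net add bgp router-id ${lo}
net add bgp neighbor ${core_ip} remote-as ${core_as}
net add bgp ipv4 unicast network ${lo}/32
net add bgp ipv4 unicast redistribute connected
net pending
net commit
EOF
}

# VX01, using the values from the configuration above.
leaf_nclu 2.2.2.2 65031 192.168.20.11/24 192.168.18.1/24 192.168.20.10 65030
```

A third leaf for a larger lab would then be one more call with new values rather than another hand-edited block.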

After configuring the VX routers, I verified that the BGP peering works correctly:

    cumulus@VX00:mgmt:~$ net show bgp summary
    show bgp ipv4 unicast summary
    =============================
    BGP router identifier 1.1.1.1, local AS number 65030 vrf-id 0
    BGP table version 7
    RIB entries 13, using 2392 bytes of memory
    Peers 2, using 41 KiB of memory

    Neighbor             V         AS MsgRcvd MsgSent   TblVer  InQ OutQ  Up/Down State/PfxRcd
    VX01(192.168.20.11)  4      65031      44      44        0    0    0 00:01:43            3
    VX02(192.168.20.12)  4      65032      36      36        0    0    0 00:01:20            3

    Total number of neighbors 2

    show bgp ipv6 unicast summary
    =============================
    No BGP neighbors found

    show bgp l2vpn evpn summary
    ===========================
    No BGP neighbors found
    cumulus@VX00:mgmt:~$

&nbsp;

    cumulus@VX01:mgmt:~$ net show bgp summary
    show bgp ipv4 unicast summary
    =============================
    BGP router identifier 2.2.2.2, local AS number 65031 vrf-id 0
    BGP table version 7
    RIB entries 13, using 2392 bytes of memory
    Peers 1, using 21 KiB of memory

    Neighbor             V         AS MsgRcvd MsgSent   TblVer  InQ OutQ  Up/Down State/PfxRcd
    VX00(192.168.20.10)  4      65030      51      51        0    0    0 00:02:04            5

    Total number of neighbors 1

    show bgp ipv6 unicast summary
    =============================
    No BGP neighbors found

    show bgp l2vpn evpn summary
    ===========================
    No BGP neighbors found
    cumulus@VX01:mgmt:~$

&nbsp;

    cumulus@VX02:mgmt:~$ net show bgp summary
    show bgp ipv4 unicast summary
    =============================
    BGP router identifier 3.3.3.3, local AS number 65032 vrf-id 0
    BGP table version 7
    RIB entries 13, using 2392 bytes of memory
    Peers 1, using 21 KiB of memory

    Neighbor             V         AS MsgRcvd MsgSent   TblVer  InQ OutQ  Up/Down State/PfxRcd
    VX00(192.168.20.10)  4      65030      49      49        0    0    0 00:01:58            5

    Total number of neighbors 1

    show bgp ipv6 unicast summary
    =============================
    No BGP neighbors found

    show bgp l2vpn evpn summary
    ===========================
    No BGP neighbors found
    cumulus@VX02:mgmt:~$
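With more lab tenants, checking these summaries by eye gets tedious. In the FRR summary output, the State/PfxRcd column shows a prefix count once a session is Established and the session state (for example Active or Idle) otherwise, so a non-numeric last field marks a peer that is down. The sketch below assumes the column layout shown above (other FRR versions may differ) and also handles the case where a long neighbor name is printed on a line of its own; `check_bgp_summary` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: report BGP neighbors that are not Established, based on the
# State/PfxRcd column (last field) of "net show bgp summary" output.
# Assumes the column layout shown above; check_bgp_summary is hypothetical.
set -euo pipefail

# Reads summary text on stdin, prints "neighbor state" for every peer that is
# down and exits non-zero if at least one such peer was found.
check_bgp_summary() {
  awk '
    /^Neighbor[ \t]+V[ \t]/     { in_table = 1; next }   # table header
    in_table && NF == 0         { in_table = 0; next }   # blank line ends the table
    in_table && /^Total number/ { in_table = 0; next }
    in_table && NF == 1         { name = $1; next }      # long name on its own line
    in_table {
      if (name == "") name = $1
      state = $NF
      if (state !~ /^[0-9]+$/) { print name, state; down++ }
      name = ""
    }
    END { exit (down > 0) }
  '
}
```

For example, `ssh cumulus@192.168.12.21 net show bgp summary | check_bgp_summary` prints nothing and exits with status 0 when both of VX00's peers are up.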

Now that the Cumulus VX virtual machines are configured correctly, we can create the Terraform script that clones them.

The Terraform script can be found below:

    ❯ tree
    .
    ├── main.tf
    ├── terraform.tfvars
    └── variables.tf

terraform.tfvars


    vsphere_user          = "administrator@vsphere.local"
    vsphere_password      = "<my vCenter Server password>"
    vsphere_server        = "vcsa-01.home.local"
    vsphere_datacenter    = "HOME"
    vsphere_datastore     = "vsanDatastore"
    vsphere_resource_pool = "Lab1"
    vsphere_network_01    = "L1-APP-MGMT-11"
    vsphere_network_02    = "SEG-VLAN16"
    vsphere_network_03    = "L1-TRANSIT-LEAF-SPINE-20"
    vsphere_network_04    = "VM Network"
    #
    vsphere_network_05    = "L1-BGP-UPLINK-01-18"
    vsphere_network_06    = "L1-BGP-UPLINK-02-19"
    #
    vsphere_virtual_machine_template_vx00 = "VX00-template"
    vsphere_virtual_machine_name_vx00     = "l1-vx00"
    #
    vsphere_virtual_machine_template_vx01 = "VX01-template"
    vsphere_virtual_machine_name_vx01     = "l1-vx01"
    #
    vsphere_virtual_machine_template_vx02 = "VX02-template"
    vsphere_virtual_machine_name_vx02     = "l1-vx02"

variables.tf


    # vsphere login account. defaults to admin account
    variable "vsphere_user" {
      default = "administrator@vsphere.local"
    }

    # vsphere account password
    variable "vsphere_password" {
      default = "<my vCenter Server password>"
    }

    # vsphere server (vCenter Server FQDN)
    variable "vsphere_server" {
      default = "vcsa-01.home.local"
    }

    # vsphere datacenter the virtual machine will be deployed to. empty by default.
    variable "vsphere_datacenter" {}

    # vsphere resource pool the virtual machine will be deployed to. empty by default.
    variable "vsphere_resource_pool" {}

    # vsphere datastore the virtual machine will be deployed to. empty by default.
    variable "vsphere_datastore" {}

    # vsphere networks the virtual machine will be connected to. empty by default.
    variable "vsphere_network_01" {}
    variable "vsphere_network_02" {}
    variable "vsphere_network_03" {}
    variable "vsphere_network_04" {}
    variable "vsphere_network_05" {}
    variable "vsphere_network_06" {}

    # vsphere virtual machine template that the virtual machine will be cloned from. empty by default.
    variable "vsphere_virtual_machine_template_vx00" {}
    variable "vsphere_virtual_machine_template_vx01" {}
    variable "vsphere_virtual_machine_template_vx02" {}

    # the name of the vsphere virtual machine that is created. empty by default.
    variable "vsphere_virtual_machine_name_vx00" {}
    variable "vsphere_virtual_machine_name_vx01" {}
    variable "vsphere_virtual_machine_name_vx02" {}
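Keeping the vCenter password as a default in variables.tf (and in terraform.tfvars) means it ends up in every copy of the code. Terraform also reads input variables from environment variables named `TF_VAR_<name>`, so one alternative is to remove the password from both files and export it for the current shell only. Note that values in terraform.tfvars take precedence over `TF_VAR_` environment variables, so the tfvars entry has to go as well. A sketch; `set_vsphere_password` is a hypothetical helper, and the file defining it has to be sourced so the export reaches your interactive shell.

```shell
#!/usr/bin/env bash
# Sketch: provide var.vsphere_password through the environment instead of a file.
# Terraform maps TF_VAR_vsphere_password to the input variable "vsphere_password".
# set_vsphere_password is hypothetical; source this file to keep the export.
set -euo pipefail

set_vsphere_password() {
  local pw="${1:-}"
  if [[ -z "$pw" ]]; then
    # Prompt without echoing the password to the terminal.
    read -r -s -p "vCenter password: " pw
    echo
  fi
  export TF_VAR_vsphere_password="$pw"
}
```

Calling `set_vsphere_password` without an argument prompts for the password; `terraform plan` and `terraform apply` then pick it up from the environment.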

main.tf


    # Terraform 0.11-style interpolation syntax (also accepted by Terraform 0.12)
    provider "vsphere" {
      user                 = "${var.vsphere_user}"
      password             = "${var.vsphere_password}"
      vsphere_server       = "${var.vsphere_server}"
      allow_unverified_ssl = true
    }

    data "vsphere_datacenter" "dc" {
      name = "${var.vsphere_datacenter}"
    }

    data "vsphere_datastore" "datastore" {
      name          = "${var.vsphere_datastore}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_resource_pool" "pool" {
      name          = "${var.vsphere_resource_pool}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_network" "network_01" {
      name          = "${var.vsphere_network_01}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_network" "network_02" {
      name          = "${var.vsphere_network_02}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_network" "network_03" {
      name          = "${var.vsphere_network_03}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_network" "network_04" {
      name          = "${var.vsphere_network_04}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_network" "network_05" {
      name          = "${var.vsphere_network_05}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_network" "network_06" {
      name          = "${var.vsphere_network_06}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_virtual_machine" "template_vx00" {
      name          = "${var.vsphere_virtual_machine_template_vx00}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_virtual_machine" "template_vx01" {
      name          = "${var.vsphere_virtual_machine_template_vx01}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    data "vsphere_virtual_machine" "template_vx02" {
      name          = "${var.vsphere_virtual_machine_template_vx02}"
      datacenter_id = "${data.vsphere_datacenter.dc.id}"
    }

    resource "vsphere_virtual_machine" "cloned_virtual_machine_vx00" {
      name                        = "${var.vsphere_virtual_machine_name_vx00}"
      wait_for_guest_net_routable = false
      wait_for_guest_net_timeout  = 0
      resource_pool_id            = "${data.vsphere_resource_pool.pool.id}"
      datastore_id                = "${data.vsphere_datastore.datastore.id}"
      num_cpus                    = 2
      memory                      = 1024
      guest_id                    = "${data.vsphere_virtual_machine.template_vx00.guest_id}"

      network_interface {
        network_id   = "${data.vsphere_network.network_01.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx00.network_interface_types[0]}"
      }

      network_interface {
        network_id   = "${data.vsphere_network.network_02.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx00.network_interface_types[0]}"
      }

      network_interface {
        network_id   = "${data.vsphere_network.network_03.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx00.network_interface_types[0]}"
      }

      network_interface {
        network_id   = "${data.vsphere_network.network_04.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx00.network_interface_types[0]}"
      }

      disk {
        label = "disk0"
        size  = "6"
        # unit_number = 0
      }

      clone {
        template_uuid = "${data.vsphere_virtual_machine.template_vx00.id}"
      }
    }

    resource "vsphere_virtual_machine" "cloned_virtual_machine_vx01" {
      name                        = "${var.vsphere_virtual_machine_name_vx01}"
      wait_for_guest_net_routable = false
      wait_for_guest_net_timeout  = 0
      resource_pool_id            = "${data.vsphere_resource_pool.pool.id}"
      datastore_id                = "${data.vsphere_datastore.datastore.id}"
      num_cpus                    = 2
      memory                      = 1024
      guest_id                    = "${data.vsphere_virtual_machine.template_vx01.guest_id}"

      network_interface {
        network_id   = "${data.vsphere_network.network_01.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx01.network_interface_types[0]}"
      }

      network_interface {
        network_id   = "${data.vsphere_network.network_03.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx01.network_interface_types[0]}"
      }

      network_interface {
        network_id   = "${data.vsphere_network.network_05.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx01.network_interface_types[0]}"
      }

      network_interface {
        network_id   = "${data.vsphere_network.network_04.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx01.network_interface_types[0]}"
      }

      disk {
        label = "disk0"
        size  = "6"
        # unit_number = 0
      }

      clone {
        template_uuid = "${data.vsphere_virtual_machine.template_vx01.id}"
      }
    }

    resource "vsphere_virtual_machine" "cloned_virtual_machine_vx02" {
      name                        = "${var.vsphere_virtual_machine_name_vx02}"
      wait_for_guest_net_routable = false
      wait_for_guest_net_timeout  = 0
      resource_pool_id            = "${data.vsphere_resource_pool.pool.id}"
      datastore_id                = "${data.vsphere_datastore.datastore.id}"
      num_cpus                    = 2
      memory                      = 1024
      guest_id                    = "${data.vsphere_virtual_machine.template_vx02.guest_id}"

      network_interface {
        network_id   = "${data.vsphere_network.network_01.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx02.network_interface_types[0]}"
      }

      network_interface {
        network_id   = "${data.vsphere_network.network_03.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx02.network_interface_types[0]}"
      }

      network_interface {
        network_id   = "${data.vsphere_network.network_06.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx02.network_interface_types[0]}"
      }

      network_interface {
        network_id   = "${data.vsphere_network.network_04.id}"
        adapter_type = "${data.vsphere_virtual_machine.template_vx02.network_interface_types[0]}"
      }

      disk {
        label = "disk0"
        size  = "6"
        # unit_number = 0
      }

      clone {
        template_uuid = "${data.vsphere_virtual_machine.template_vx02.id}"
      }
    }

Validate your code (run terraform init first if the working directory has not been initialized yet):

    ihoogendoor-a01:Test iwanhoogendoorn$ tfenv use 0.12.24
    [INFO] Switching to v0.12.24
    [INFO] Switching completed
    ihoogendoor-a01:Test iwanhoogendoorn$ terraform validate

Plan your code:

ihoogendoor-a01:Test iwanhoogendoorn$ terraform plan

Execute your code to clone the virtual machines:

ihoogendoor-a01:Test iwanhoogendoorn$ terraform apply
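The individual commands can also be wrapped in a small script that stops at the first failure and applies exactly the plan you reviewed. A sketch, assuming Terraform 0.12 as selected with tfenv above; `plan_lab`, `apply_lab`, the plan file name and the `TF` override (for example `TF=echo` for a dry run) are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: init, validate and plan to a file, then apply that saved plan.
# plan_lab/apply_lab, PLAN_FILE and the TF override are hypothetical names.
set -euo pipefail

TF="${TF:-terraform}"
PLAN_FILE="${PLAN_FILE:-lab1.tfplan}"

plan_lab() {
  "$TF" init -input=false
  "$TF" validate
  "$TF" plan -input=false -out="$PLAN_FILE"
}

# Applying a saved plan does not ask for confirmation, so review the
# output of plan_lab before running this.
apply_lab() {
  "$TF" apply -input=false "$PLAN_FILE"
}
```

Splitting planning and applying into two functions keeps a manual review step between them, which the plain `terraform apply` prompt otherwise provides.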

When the virtual machines are no longer needed, you can remove them again:

ihoogendoor-a01:Test iwanhoogendoorn$ terraform destroy

This Terraform script clones the three virtual machines with the IP addresses and BGP peers preconfigured.
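After the clones are up, they can be checked in one go by running the BGP summary on each router over SSH. A sketch, assuming the management addresses from the eth0 configuration above (192.168.12.21 to 192.168.12.23) are reachable and that you log in as the cumulus user; `verify_lab` and the `SSH` override (for example `SSH=echo` for a dry run) are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: run "net show bgp summary" on every cloned router over SSH.
# Management IPs come from the eth0 configuration above; verify_lab and the
# SSH override are hypothetical.
set -euo pipefail

SSH="${SSH:-ssh}"
ROUTERS=(192.168.12.21 192.168.12.22 192.168.12.23)

verify_lab() {
  local ip
  for ip in "${ROUTERS[@]}"; do
    echo "=== $ip ==="
    # Keep going when one router is unreachable instead of aborting the loop.
    "$SSH" -o ConnectTimeout=5 "cumulus@$ip" net show bgp summary \
      || echo "unreachable: $ip" >&2
  done
}
```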